In Part 1, I compared the performance and cost of Intel Cascade Lake versus AMD Rome. How does Amazon’s own CPU, Graviton, fare in this benchmark?

If you’re reading this, you probably know that Amazon has developed its own Arm-based CPU for AWS workloads. This all started in earnest in early 2015, when it acquired Annapurna Labs. The first CPU, now called Graviton1, went into production late in 2018. A good description of some of the history is in this article. Graviton1 is admittedly somewhat underpowered compared to other server chips, supporting just 16 vCPUs (cores, or threads) and being, in some benchmarks, just twice as fast as a Raspberry Pi 3. But, it did signal AWS’s intent to move away from x86 in the datacenter, and probably convinced some ecosystem partners to commit to development who might otherwise have been skeptical.

And just last month (about one year after the announcement of Graviton1), Amazon announced Graviton2. This looks to be a serious upgrade to Graviton1, and maybe a threat to the Intel and AMD x86 Instances that are available within AWS. Graviton2 Instances come in three main categories: general purpose (M6g), compute optimized (C6g), and memory optimized (R6g). Amazon claims up to 40% improved price/performance over the current-generation M5 (General Purpose, Intel Skylake), C5 (Compute Optimized, either Intel Cascade Lake or Skylake), and R5 (Memory Optimized, Intel Skylake) Instances.

As soon as I saw the announcement of Graviton2, I wanted to benchmark it with my Yocto OpenBMC builds. C6g and R6g are still works in progress and have not launched yet, and M6g is available only under Preview. M6g is also probably the closest match to the M5a (AMD Rome) and M5n (Intel Cascade Lake) Instances that I benchmarked in Part 1, so I applied for the Preview and am awaiting a response.

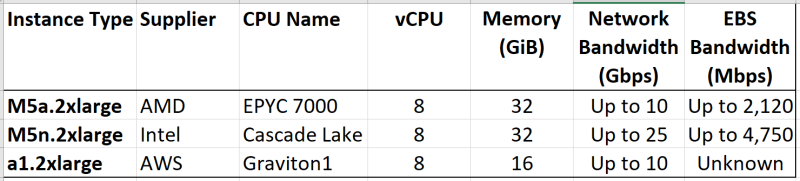

But in the meantime, I thought that I could benchmark Graviton1, if only to acquaint myself with the AWS Arm environment, and see any differences in the Yocto build environment between x86 and Arm. So, I fired up an a1.2xlarge Instance, and added its characteristics to my benchmarking table:

You can see again that it’s not an apples-to-apples comparison. Although I could match up the number of vCPUs (essentially the number of threads), memory available in the different configurations is not the same; and AWS doesn’t specify the EBS Bandwidth. But, it’s a learning experience, so I decided to proceed anyway.

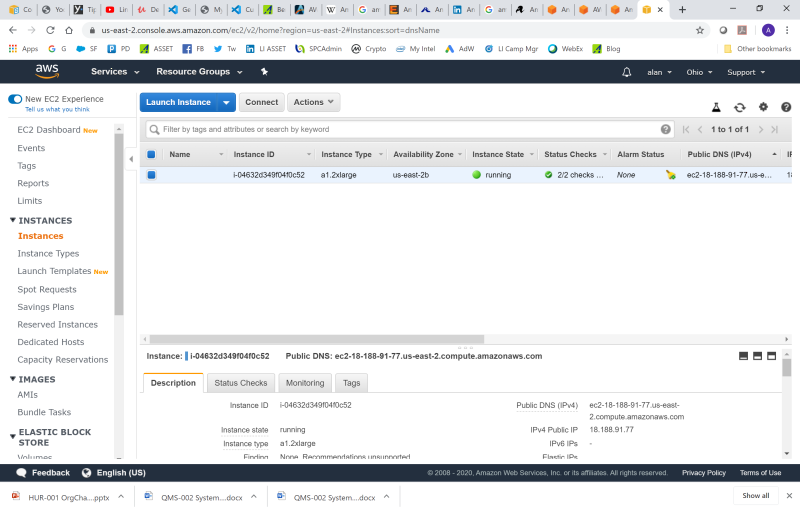

Setting up a Graviton1 Instance is just as easy as an x86 Instance. Just click on “Launch Instance”, specify the Amazon Machine Image (AMI) and the Instance Type, make sure you have enough disk space for the Yocto build (I used 70 GiB), and log in:

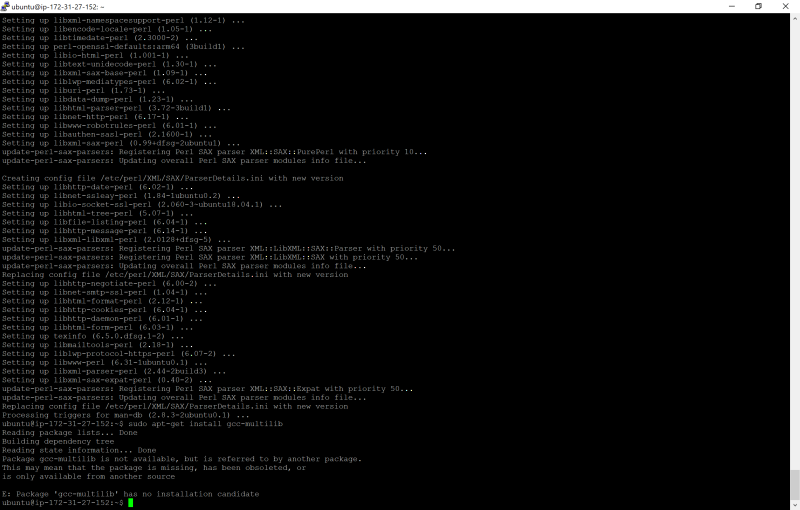

This is where the x86 and the Arm approaches diverged a little, and required that I do some homework. To set up the Yocto build environment, you need to install the prerequisite packages:

$ sudo apt-get update

$ sudo apt-get upgrade

$ sudo apt-get install gawk wget git-core diffstat unzip texinfo gcc-multilib \
build-essential chrpath socat cpio python python3 python3-pip python3-pexpect \
xz-utils debianutils iputils-ping python3-git python3-jinja2 libegl1-mesa libsdl1.2-dev \
pylint3 xterm

Unfortunately, the apt-get install failed on gcc-multilib, with the error “E: Package ‘gcc-multilib’ has no installation candidate”:

I found, through some research, that I had to replace “gcc-multilib” with “gcc-7-multilib-arm-linux-gnueabi” to get this to work. Whew!
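If you run the same setup script on both x86 and Arm Instances, the package swap can be keyed off the machine architecture. The following is only a sketch: `multilib_pkg` is a hypothetical helper name, the aarch64 package name is the one that worked for me above, and I have not tried any other architectures.

```shell
#!/bin/sh
# Print the multilib package name to install for a given machine architecture.
# multilib_pkg is a hypothetical helper for illustration.
# The aarch64 mapping is the substitution described in this post;
# anything else is rejected rather than guessed.
multilib_pkg() {
    case "$1" in
        x86_64)        echo "gcc-multilib" ;;
        aarch64|arm64) echo "gcc-7-multilib-arm-linux-gnueabi" ;;
        *)             echo "unsupported architecture: $1" >&2; return 1 ;;
    esac
}
```

In a setup script you would then pass `"$(multilib_pkg "$(uname -m)")"` to `apt-get install` in place of the hard-coded `gcc-multilib`.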

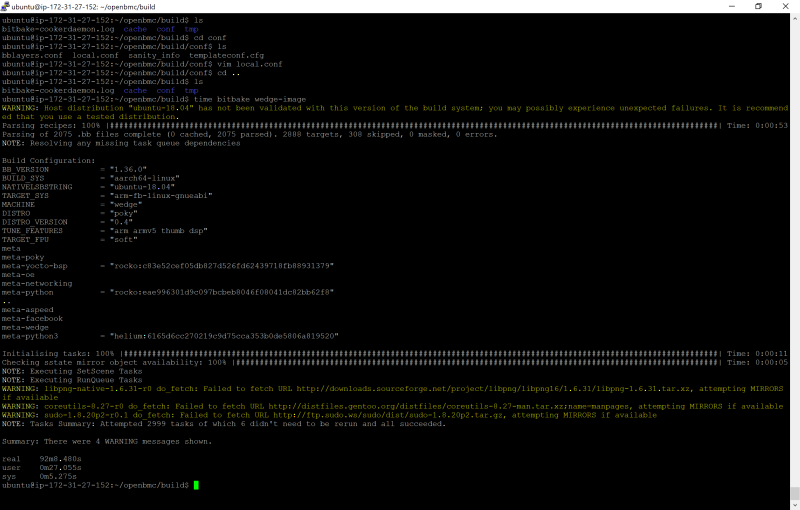

But, then, I got another error after starting the bitbake, “ERROR: Uninative selected but not configured correctly, please set UNINATIVE_CHECKSUM[aarch64]”.

I got around this by adding to the file conf/local.conf:

INHERIT_remove = "uninative"
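If you prefer to script this change instead of editing the file by hand, something like the sketch below should work. `ensure_conf_line` is a hypothetical helper, not part of Yocto or BitBake; it appends the line only when it is not already present, so running it twice does not duplicate the setting.

```shell
#!/bin/sh
# Append a line to a config file only if an identical line is not already there.
# ensure_conf_line is a hypothetical helper name used for illustration.
ensure_conf_line() {
    file="$1"
    line="$2"
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

From the build directory, the call would be `ensure_conf_line conf/local.conf 'INHERIT_remove = "uninative"'`.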

Then, the build succeeded:

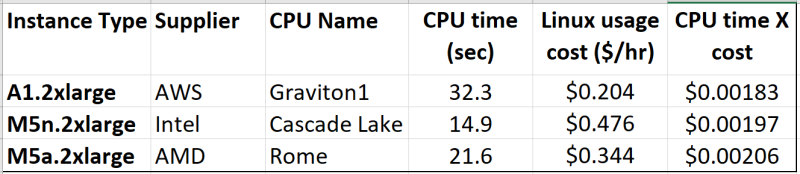

So, for the finale, here is the summary table:

The results are fascinating. Although Graviton1 is significantly slower than Cascade Lake or Rome, its hourly cost is much lower, so the total cost per build is the lowest of the three (although note that doing these runs is pretty cheap on any of them).
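The cost comparison is simple arithmetic: the hourly On-Demand price multiplied by the build time in hours. Here is a minimal sketch of that calculation. `build_cost` is a hypothetical helper, and you would supply your own Instance price and measured build time; no figures from my runs are baked in.

```shell
#!/bin/sh
# Cost of one build in dollars: hourly price times build time in hours.
# build_cost is a hypothetical helper name used for illustration.
# $1 = Instance price in dollars per hour, $2 = build time in seconds
build_cost() {
    awk -v price="$1" -v secs="$2" 'BEGIN { printf "%.4f\n", price * secs / 3600 }'
}
```

For example, with made-up numbers, an Instance that takes twice as long to build but costs a third as much per hour still comes out ahead, because the per-build cost is two thirds of the faster Instance's.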

Are there sources of error in this benchmarking exercise? Certainly. I could not get all the platforms consistent in terms of vCPU, Memory, Network Bandwidth, and EBS Bandwidth. And by eliminating the uninative requirement in the Arm64 build, am I possibly artificially reducing the build time? That is an exercise for a future blog, perhaps.

Nonetheless, the results are impressive, at least for this workload. I’m looking forward to getting access to the Graviton2 Instance, so I can see how much faster it is.