I was asked recently whether engineers could just check the CRC error counts reported by the operating system to confirm that they had good signal integrity and operating margins. After all, a CRC checks for bit errors, right? Here’s why this is not good enough:

We’re all aware that signal integrity (SI) has grown in importance as bus speeds have increased. I/O technologies such as PCIe Gen3, SATA 3, USB 3.0, Intel® QuickPath Interconnect (QPI), XLAUI and others all run at 5.0 GT/s and faster. At these speeds, design defects and silicon and board manufacturing variances all reduce the operating margins of today’s board designs. Many of these technologies use techniques such as encoding, scrambling, and adaptive equalization to “open the eyes” on the buses, but the effects of jitter, inter-symbol interference (ISI), crosstalk and other impairments must still be simulated and tested for.

Let’s look at PCIe Gen3 for a moment. It’s an AC-coupled, differential bus that uses embedded clocking to provide a robust and survivable data path. It uses 128b/130b encoding and data scrambling to avoid long strings of consecutive identical digits (CIDs). These long strings are the bane of signal integrity, because they severely impair the ability of the clock recovery (CR) circuits to lock and hold. Encoding and scrambling also help achieve DC balance in the bit stream, reducing baseline wander and improving error recovery.
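To make the CID point concrete, here is a minimal Python sketch of an additive scrambler. It assumes a 23-bit LFSR built from $x^{23} + x^{21} + x^{16} + x^{8} + x^{5} + x^{2} + 1$, the polynomial usually cited for the PCIe 3.0 scrambler. The real per-lane seeds, sync-header handling and reset rules are not modeled, and the function names and seed value are hypothetical.

```python
# Illustrative additive scrambler: XOR the data with an LFSR keystream.
# Assumption: 23-bit LFSR with recurrence taken from
# x^23 + x^21 + x^16 + x^8 + x^5 + x^2 + 1.
# PCIe's per-lane seeding and sync-header rules are NOT modeled here.

DEGREE = 23
TAPS = (21, 16, 8, 5, 2, 0)  # exponents below 23 with a nonzero coefficient


def lfsr_bits(seed: int, n: int) -> list[int]:
    """Return n keystream bits from s[k+23] = XOR of s[k+t] for t in TAPS."""
    if not 0 < seed < (1 << DEGREE):
        raise ValueError("seed must be a nonzero 23-bit value")
    s = [(seed >> i) & 1 for i in range(DEGREE)]
    while len(s) < n:
        k = len(s) - DEGREE
        bit = 0
        for t in TAPS:
            bit ^= s[k + t]
        s.append(bit)
    return s[:n]


def scramble(data: list[int], seed: int) -> list[int]:
    """Additive scrambling; applying it twice with the same seed descrambles."""
    key = lfsr_bits(seed, len(data))
    return [d ^ k for d, k in zip(data, key)]


def longest_cid_run(bits: list[int]) -> int:
    """Length of the longest run of consecutive identical digits."""
    best = run = 0
    prev = None
    for b in bits:
        run = run + 1 if b == prev else 1
        prev = b
        best = max(best, run)
    return best


if __name__ == "__main__":
    idle = [0] * 100_000  # worst case for clock recovery: no transitions at all
    print("unscrambled longest CID:", longest_cid_run(idle))
    print("scrambled longest CID:  ", longest_cid_run(scramble(idle, 0x1ABCDE)))
```

Fed an all-zeros payload, the unscrambled stream is one 100,000-bit CID, while the scrambled stream can never hold more than 23 identical bits in a row: 23 zeros would require the all-zero LFSR state, which a nonzero seed never reaches, and a run of 24 ones is impossible because the all-ones state feeds back a 0. Scrambling makes long runs unlikely for ordinary data; it doesn’t forbid them.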

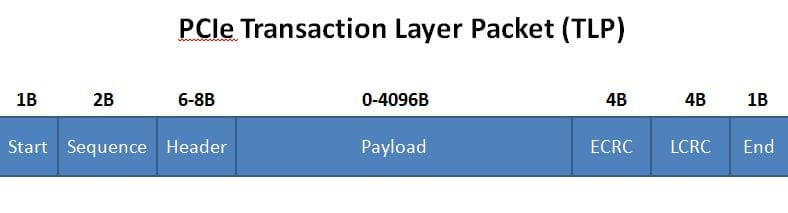

PCI Express encapsulates its data within a TLP (Transaction

Layer Packet) which contains a CRC (Cyclical Redundancy Code) which “protects”

the entire packet (with the exception of the framing start/end bytes). A TLP looks like this:

For PCIe 3.0, the link CRC (LCRC) is 32 bits wide because of the large, variable-sized payload. The end-to-end CRC (ECRC) provides an additional level of data integrity across multiple link hops (for example, through switches). Other buses like QPI, which use a smaller, fixed-size payload, use an 8-bit link CRC.

Now that we’ve covered that background, let’s look at the four reasons why CRC checking is inadequate compared with pattern-based checking:

1. It takes a long time to detect failures at nominal voltage and timing.

PCIe Gen3 runs at 8 GT/s and is rated within the PCI-SIG specification for one bit error in $10^{12}$ bits. When I say “rated”, I mean that at nominal voltage and timing, the bit error ratio (BER) should be below this rate. This is because the signaling schemes across all serial buses are never guaranteed to deliver the bits perfectly across the interconnects. The physical layer is always designed to minimize the probability of incorrect transmission and/or reception of a bit, not down to zero, but down to the rated BER. Within this BER, the physical layer allows routine transmission errors to occur, and recovery mechanisms in the link layer (in PCIe, replaying any TLP that fails its LCRC check) are employed so that higher-level functions are neither aware of nor affected by these errors.

So, to find out whether errors are occurring more often than the BER threshold allows, you have to run traffic for a long time. The confidence level in the bus is given by the equation in our whitepaper “Platform Validation using Intel Interconnect Built-In Self Test (Intel IBIST)”. For a bus like QPI, which is rated at one bit error in $10^{14}$ bits, achieving a high confidence level can take days or weeks. Engineers don’t have weeks to test signal integrity given today’s aggressive design delivery schedules.

2. It doesn’t give the design’s true margins under real-world conditions.

When system-level, OS-based CRC testing is used, the test is usually performed at nominal timing and voltage, and while the design is “fresh”; that is, under “perfect” conditions. But we’ve seen that process/voltage/temperature (PVT) effects can cause a wide swing in margins. That’s why silicon vendors bin their devices based on their performance at the fringe of the envelope. Drift in high-speed I/O circuits (aging of capacitors, variations in power supplies, the effect of current leakage on gates over time, etc.) also erodes operating margins. So testing a board design only under ideal conditions may mislead you into thinking that signal integrity is OK.

Testing margins with a worst-case, extreme-stress synthetic pattern, mapped to an eye mask that takes drift and PVT effects into account, addresses this.
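As a rough illustration of margin testing, the sketch below steps the receiver’s sampling point outward from the eye center along the timing and voltage axes, running a pattern check at each step, and then compares the worst-side margins with an eye mask widened by a guard band for drift and PVT. The `passes` hook, the step sizes, the mask numbers and the diamond-shaped `toy_eye` are all hypothetical stand-ins for real margining hardware.

```python
from typing import Callable

# Hypothetical pass/fail hook: run a stress pattern with the sampling point offset
# by t_ui (unit intervals) and v_mv (millivolts); return True if no bit errors.
PassFn = Callable[[float, float], bool]


def scan_axis(passes: PassFn, axis: str, step: float, limit: float) -> tuple[float, float]:
    """Step outward from the eye center along one axis; return the last passing
    offset in the negative and positive directions (0.0 if the first step fails)."""
    margins = []
    for sign in (-1.0, 1.0):
        last_ok = 0.0
        k = 1
        while k * step <= limit:
            offset = sign * k * step
            t, v = (offset, 0.0) if axis == "timing" else (0.0, offset)
            if not passes(t, v):
                break
            last_ok = k * step
            k += 1
        margins.append(last_ok)
    return margins[0], margins[1]


def meets_mask(passes: PassFn, mask_half_width_ui: float, mask_half_height_mv: float,
               guard_ui: float, guard_mv: float) -> bool:
    """True if the worst-side margin on each axis covers the mask plus a guard band
    reserved for drift and PVT effects that are not present on the bench."""
    t_neg, t_pos = scan_axis(passes, "timing", step=0.01, limit=0.5)
    v_neg, v_pos = scan_axis(passes, "voltage", step=1.0, limit=200.0)
    return (min(t_neg, t_pos) >= mask_half_width_ui + guard_ui
            and min(v_neg, v_pos) >= mask_half_height_mv + guard_mv)


def toy_eye(half_width_ui: float, half_height_mv: float) -> PassFn:
    """Stand-in for real hardware: a diamond-shaped eye opening."""
    return lambda t, v: abs(t) / half_width_ui + abs(v) / half_height_mv < 1.0


if __name__ == "__main__":
    eye = toy_eye(half_width_ui=0.30, half_height_mv=60.0)
    print(scan_axis(eye, "voltage", step=1.0, limit=200.0))
    print(meets_mask(eye, 0.15, 25.0, guard_ui=0.05, guard_mv=10.0))  # fits with a small guard band
    print(meets_mask(eye, 0.15, 25.0, guard_ui=0.15, guard_mv=10.0))  # fails with a larger one
```

A real margining flow would typically scan the full two-dimensional eye rather than only its two axes, and would derive the guard band from characterization data rather than a fixed number.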

3. CRC is not perfect

CRCs use polynomial arithmetic to create a checksum over the data they are intended to protect. The design of the CRC polynomial depends on the maximum total length of the block to be protected (data + CRC bits), the desired error protection features, the type of resources available for implementing the CRC, and the desired performance. Trade-offs between these factors are quite common. For example, the PCI Express 3.0 LCRC uses the following 32-bit generator polynomial (04C11DB7h):

$$x^{32} + x^{26} + x^{23} + x^{22} + x^{16} + x^{12} + x^{11} + x^{10} + x^{8} + x^{7} + x^{5} + x^{4} + x^{2} + x + 1$$

This is the same CRC-32 generator polynomial that Ethernet uses for its frame check sequence, a good fit for large, variable-length packets. QPI, with its small, fixed-size flits, uses an 8-bit CRC instead.

The PCI Express 3.0 32-bit LCRC will detect all 1-bit, 2-bit, and 3-bit errors in a TLP. Some 4-bit errors may escape detection, and bit slips or adds have no guarantee of detection. All burst errors of 32 bits or less are detected.
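To see both sides of that behavior, the sketch below uses Python’s `zlib.crc32`, which implements the same 04C11DB7h polynomial in the Ethernet/zlib bit-ordering convention (PCIe’s own LCRC bit mapping is not modeled). It confirms that every 1-bit and 2-bit error in a 16-byte payload is caught, then builds a 15-bit error pattern that is a multiple of the generator polynomial and therefore goes unnoticed. The helper function names are hypothetical.

```python
import zlib

# CRC-32 in the zlib/Ethernet convention (reflected, init and final XOR 0xFFFFFFFF)
# with generator polynomial 0x04C11DB7. Only the polynomial's error-detection
# behavior is illustrated; PCIe's LCRC bit ordering is NOT modeled.
CRC32_TERMS = (32, 26, 23, 22, 16, 12, 11, 10, 8, 7, 5, 4, 2, 1, 0)


def flip(data: bytes, positions) -> bytes:
    """Flip bit positions; position p is bit p % 8 (LSB first) of byte p // 8,
    which is the order in which the reflected CRC consumes bits."""
    out = bytearray(data)
    for p in positions:
        out[p // 8] ^= 1 << (p % 8)
    return bytes(out)


def detected(data: bytes, positions) -> bool:
    """True if flipping these bits changes the CRC."""
    return zlib.crc32(flip(data, positions)) != zlib.crc32(data)


def all_1_and_2_bit_errors_detected(data: bytes) -> bool:
    n = len(data) * 8
    singles = all(detected(data, [i]) for i in range(n))
    doubles = all(detected(data, [i, j]) for i in range(n) for j in range(i + 1, n))
    return singles and doubles


def undetectable_error(data: bytes, start: int = 0) -> bytes:
    """Corrupt data with an error pattern equal to the generator polynomial G(x).
    Any error polynomial that is a multiple of G(x) leaves the CRC unchanged."""
    positions = [start + (32 - d) for d in CRC32_TERMS]
    return flip(data, positions)


if __name__ == "__main__":
    msg = bytes(range(16))  # 128-bit payload
    print("all 1- and 2-bit errors caught:", all_1_and_2_bit_errors_detected(msg))
    bad = undetectable_error(msg)
    print("15-bit error caught:", zlib.crc32(bad) != zlib.crc32(msg))
```

Any error pattern that happens to be a multiple of $G(x)$ slips through no matter how many bits it flips; the CRC only makes such patterns unlikely when errors are random.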

For QPI, the 8-bit CRC can detect the following within flits:

- All 1b, 2b, and 3b errors
- Any odd number of bit errors
- All bit errors of burst length 8 or less. Burst length refers to the span of bits in the payload being checked, from the first bit in error to the last (e.g. ‘1xxxxxx1’ is a burst of length 8).
- 99% of all errors with burst length 9
- 99.6% of all errors of burst length > 9
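The QPI burst figures follow from polynomial arithmetic: an error pattern goes undetected exactly when the generator polynomial divides it, and where the burst sits in the flit doesn’t matter. The brute-force Python sketch below checks every burst pattern of a given length. QPI’s actual polynomial isn’t given here, so it assumes the stand-in $x^8 + x^2 + x + 1$, which, like any 8-bit CRC with $(x+1)$ as a factor, also catches every odd number of bit errors. Function names are hypothetical.

```python
# Burst-error coverage of an 8-bit CRC, computed by brute force.
# Assumption: stand-in polynomial G(x) = x^8 + x^2 + x + 1 (0x107), NOT QPI's
# documented polynomial. Its (x + 1) factor guarantees odd-weight detection.
G = 0x107


def poly_mod(a: int, g: int) -> int:
    """Remainder of a(x) / g(x) over GF(2); polynomials are stored as bit masks."""
    while a.bit_length() >= g.bit_length():
        a ^= g << (a.bit_length() - g.bit_length())
    return a


def burst_patterns(length: int):
    """All error patterns whose first and last bits are in error, spanning `length` bits."""
    if length == 1:
        yield 1
        return
    for middle in range(1 << (length - 2)):
        yield (1 << (length - 1)) | (middle << 1) | 1


def detected_fraction(length: int, g: int = G) -> float:
    """Fraction of bursts of this length the CRC catches (undetected iff g divides it)."""
    patterns = list(burst_patterns(length))
    caught = sum(1 for e in patterns if poly_mod(e, g) != 0)
    return caught / len(patterns)


if __name__ == "__main__":
    for b in range(1, 13):
        print(f"burst length {b:2d}: {100 * detected_fraction(b):.2f}% detected")
```

For any 8-bit CRC polynomial with a nonzero constant term, exactly one of the 128 possible length-9 bursts is the polynomial itself, so 127/128 (about 99.2%) are caught; for longer bursts the undetected share settles at 1/256, giving about 99.6%.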

4. OS-based traffic CRC checking doesn’t really stress the bus

Most CRC-based tests saturate the bus with heavy OS-based traffic, e.g. streaming video. This can push bus utilization above 90%. This normal functional traffic is subject to 128b/130b encoding and scrambling, which reduce the occurrence of long strings of 31 consecutive identical bits (CIDs) to below $10^{-12}$, the BER threshold. But stressing clock recovery (CR) circuits requires exercising them with long CIDs. Just running traffic and checking CRCs doesn’t cut it.

“Synthetic” or “killer” patterns are necessary to aggravate all reasonably likely ISI (inter-symbol interference), challenge the ability of clock recovery circuits to lock and hold, and check receiver circuitry against drift. A PRBS31 pattern fulfills these criteria. The intent is to generate the most stressful patterns possible, then check the received bits one by one. It doesn’t get more precise than that.
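To illustrate what checking the bits one by one means, here is a minimal Python sketch of a PRBS31 generator and checker. It assumes the polynomial commonly used for PRBS31, $x^{31} + x^{28} + 1$; real tools such as IBIST generate and check patterns in hardware at line rate, and the function names here are hypothetical.

```python
# PRBS31 generator and bit-by-bit checker (software illustration only).
# Assumption: PRBS31 defined by x^31 + x^28 + 1, i.e. s[n] = s[n-3] XOR s[n-31].


def prbs31(seed: list[int], n: int) -> list[int]:
    """First n bits of the sequence; seed supplies the first 31 bits (not all zero)."""
    if len(seed) != 31 or not any(seed):
        raise ValueError("seed must be 31 bits, not all zero")
    s = list(seed)
    while len(s) < n:
        s.append(s[-3] ^ s[-31])
    return s[:n]


def check_prbs31(received: list[int]) -> int:
    """Count bit errors: seed a local generator from the first 31 received bits
    (assumed error-free), then compare every later bit one by one."""
    expected = prbs31(received[:31], len(received))
    return sum(r != e for r, e in zip(received[31:], expected[31:]))


def longest_run(bits: list[int]) -> int:
    """Length of the longest run of consecutive identical bits."""
    best = run = 0
    prev = None
    for b in bits:
        run = run + 1 if b == prev else 1
        prev = b
        best = max(best, run)
    return best


if __name__ == "__main__":
    tx = prbs31([1] * 31, 100_000)
    rx = list(tx)
    for pos in (1_000, 50_000, 99_999):  # inject three bit errors
        rx[pos] ^= 1
    print("longest CID in pattern:", longest_run(tx))
    print("bit errors counted:", check_prbs31(rx))
```

Starting from an all-ones seed, the pattern opens with the 31-bit run of identical bits that makes PRBS31 hard on clock recovery, and the checker counts each injected error exactly once. A CRC, by contrast, only flags that a packet was bad.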

So after all this, let’s ask: why is this important? Bad signal integrity in a design carries a high risk of uncorrectable errors or system crashes, resulting in costly field repairs or even product recalls. And let’s not forget power consumption: if SI is not optimized, adaptive equalization can increase power requirements by 15% to 30%. So if your signal integrity is bad…

SI only gets worse over time. All systems eventually run slower and start to hang or crash. You want your design to run clean when it is first shipped.