As network functions become virtualized and proprietary hardware gives way to commodity Intel-based servers, will carrier service levels degrade?

For telecom service providers, downtime is extremely expensive. According to a Heavy Reading study, mobile service providers spend $15 billion annually dealing with network outages and degradations. This cost includes, but is not limited to, penalties under Service Level Agreements (SLAs), subscriber churn, and the direct material and labor costs of restoring systems to service, diagnosing the root cause, and taking preventive measures afterward.

Historically, voice and data processing applications could deliver 99.999% (“five-nines”) uptime, provided the underlying hardware and software platform was reliable to 99.9999%, or six-nines. This was achieved with proprietary fault-tolerant and match-synced hardware, operating systems, and control and data forwarding kernels, an approach that began in the 1970s and continued through the early 1990s.
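To make the gap between five-nines and six-nines concrete, the short sketch below converts an availability figure into expected downtime per year. It assumes an average year of 365.25 days; the function name `downtime_per_year` is purely illustrative. Under that assumption, five-nines allows roughly 5.3 minutes of downtime per year, while six-nines allows only about 32 seconds.

```python
# Illustrative sketch: convert an availability figure into expected downtime per year.
# Assumption: a year is 365.25 days on average.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def downtime_per_year(availability: float) -> float:
    """Return expected unavailable seconds per year for an availability in [0, 1]."""
    return (1.0 - availability) * SECONDS_PER_YEAR


if __name__ == "__main__":
    for label, a in [("five-nines", 0.99999), ("six-nines", 0.999999)]:
        seconds = downtime_per_year(a)
        print(f"{label}: {seconds:.1f} s/year ({seconds / 60:.2f} min/year)")
```

Each additional nine cuts the allowable downtime by a factor of ten, which is why the platform underneath an application has to be one nine better than the service it supports.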

In the 1990s, enterprises began migrating select parts of their networks toward what we would today call Software-Defined Networking (SDN). The essence of SDN is the separation of control and forwarding functions, the centralization of that control, and the rapid provisioning of new services and network control through a well-defined set of interfaces. To support this, many network elements, such as Voice over IP PBXs, moved to commodity Intel-based platforms. The success of this evolution eventually paved the way for open hardware and software in the data center. One example is Facebook’s Open Compute Project (OCP) and its many subsidiary specifications, among them OpenBMC, an open software stack for the Baseboard Management Controller, and the Switch Abstraction Interface (SAI), a standardized C API for programming switch ASICs. The Open Compute Project covers server, storage, and telecom platforms, and Facebook and others have saved billions of dollars in capex and opex thanks to these initiatives.

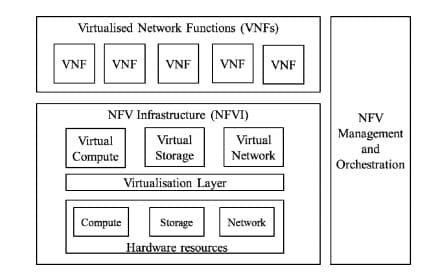

As this open, standardized approach matures, it will inevitably make its way into telecom service provider data centers and networks. Deployment has been slow, partly because of the enormous capital involved and partly because of the industry’s very stringent high-availability requirements. But the European Telecommunications Standards Institute (ETSI) has pioneered this movement, and more than 30 proof-of-concept trials are now underway. One of the key elements for successful deployment is Network Functions Virtualization (NFV); an overall block diagram of it appears in the ETSI NFV Architectural Framework document.

I will write more in subsequent blog posts about how the hardware, software, and firmware interactions within such systems pose enormous challenges to system performance, reliability, and availability. Recently, however, the focus does seem to have shifted from base functionality and performance alone toward reliability and availability. This is a necessary step for the technology to make its way into telecom provider networks. High availability depends both on how quickly a system can be restored to service and on how quickly the root cause of a failure can be diagnosed. To achieve fast problem diagnosis, commercial off-the-shelf (COTS) hardware platforms need to be enhanced with some of the system management capabilities found on the proprietary hardware platforms of 30 years ago. In particular, hardware-level, JTAG-assisted debug and test was inherent in those proprietary systems. ASSET’s offering in this space, for BMCs and the Intel Innovation Engine, is described in the SED Technical Brief.
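One common way to see why diagnosis time matters is the steady-state availability formula A = MTBF / (MTBF + MTTR), where the mean time to repair (MTTR) includes both diagnosing the fault and restoring service. The sketch below is a minimal illustration under that textbook model; the MTBF and repair times are made-up numbers chosen only to show the effect, not measurements from any real platform.

```python
import math


def availability(mtbf_hours: float, diagnose_hours: float, restore_hours: float) -> float:
    """Steady-state availability A = MTBF / (MTBF + MTTR).

    Here MTTR is modeled (as an assumption) as diagnosis time plus restoration time.
    """
    mttr = diagnose_hours + restore_hours
    return mtbf_hours / (mtbf_hours + mttr)


def nines(a: float) -> float:
    """Express availability as a count of nines, e.g. 0.99999 -> 5.0."""
    return -math.log10(1.0 - a)


if __name__ == "__main__":
    # Hypothetical platform: 50,000 h MTBF and 15 minutes to restore service.
    slow = availability(50_000, diagnose_hours=4.0, restore_hours=0.25)
    fast = availability(50_000, diagnose_hours=0.25, restore_hours=0.25)
    print(f"4 h diagnosis:    A = {slow:.6f} ({nines(slow):.2f} nines)")
    print(f"15 min diagnosis: A = {fast:.6f} ({nines(fast):.2f} nines)")
```

In this invented scenario, cutting diagnosis time from four hours to fifteen minutes moves the platform from roughly four nines to five without changing how often it fails, which is the argument for bringing hardware-level debug capabilities to COTS servers.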